Data Engineer SQL Interview Questions From Top Employers

Categories:

Written by:

Written by:Irakli Tchigladze

In this article, we will list important SQL concepts and walk you through 10 data engineer SQL interview questions asked by employers like Google and Amazon.

Companies collect and maintain large volumes of data to better understand their customers and improve various facets of their business. However, raw data can be a bit messy and disorganized. Companies need data engineers to refine initially collected data so that it’s easier to work with.

Data can be used for various tasks - analysis, visualization, or fed into machine learning models, just to name a few. Data engineers prepare data according to the specifics of the task so that data scientists can utilize the data instead of fixing its inconsistencies.

To do this, data engineers need to have a specific set of skills. In this article, we will discuss SQL - the main programming language for working with relational databases. We’ll go over the most important SQL concepts tested in SQL interview questions and walk you through data engineer SQL interview questions asked at top organizations today.

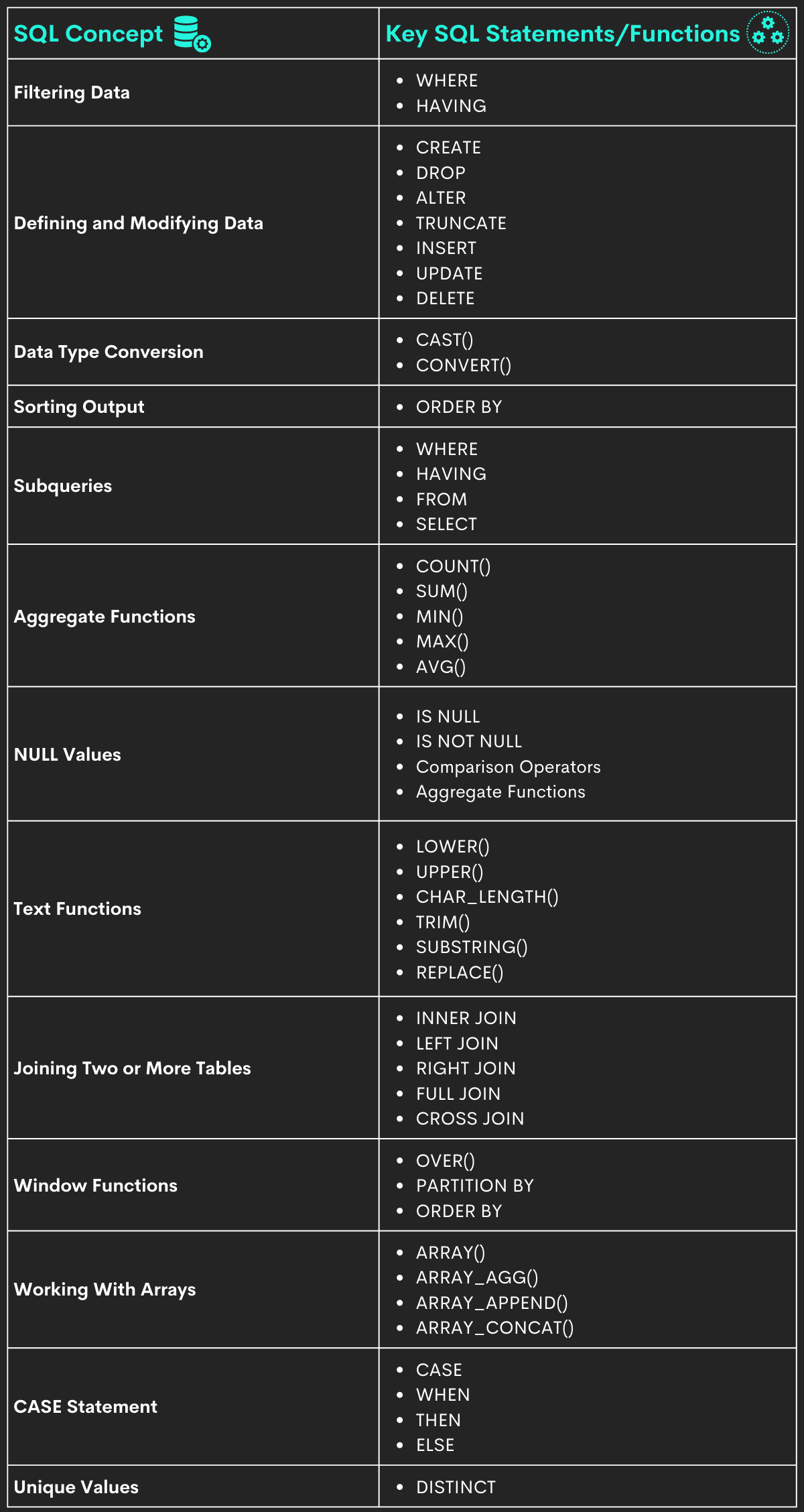

Concepts tested in data engineer SQL interview questions

Data engineers perform a wide variety of tasks. Few examples: validating and ordering data, handling edge cases, implementing consistent formatting, fixing bad data, and changing data types.

Data engineers will most likely use a combination of programming languages and tools. SQL and Python are two of the most important skills. StrataScratch blog has published an article about Python Data Engineer Interview Questions as well.

We compiled a list of SQL concepts most often used in data engineers’ day-to-day jobs. If you’re preparing for an interview, make sure to master these concepts. Data engineer SQL Interview questions often require a strong knowledge of these features.

We will start with easier concepts and cover difficult ones as well.

Filtering

As a data engineer, you must know how to use the WHERE clause to filter tables. It is usually paired with a condition and returns only rows that satisfy the condition.

It’s important to know how to set up a condition for the WHERE clause and how to couple it with SELECT, UPDATE and DELETE statements.

HAVING is another important filtering feature of SQL. Unlike WHERE, it is used to filter aggregated group data. It’s important to know the differences between WHERE and HAVING clauses and when to use them.

DDL and DML

Data Definition Language (DDL) and Data Manipulation Language (DML) allow data engineers to define and modify database structure - create new tables and objects, specify their name, define columns and specify types of values in them. This is a very short list of what data engineers can do using DDL and DML.

Data Type Conversion

Sometimes, data engineers have to convert values to another type. This may be necessary to perform certain SQL operations like comparison.

To be an effective data engineer, you need to know the difference between implicit and explicit type conversions. It’s important to know how to use CAST(), and CONVERT() functions for explicit conversion, and even better if you can explain the differences between these two functions. This will set you apart from other candidates interviewing for the same job.

You should also be prepared to explain implicit conversions and when they occur. This is important because one unexpected data conversion could lead to errors that are hard to identify and resolve.

ORDER BY

Arranging data using the ORDER BY statement is a basic but important SQL skill. Sometimes complex data engineering tasks can be solved using a simple ORDER BY statement.

The basic syntax of the ORDER BY statement is simple - you need to specify column values and the order (ascending or descending) to arrange rows. Advanced data engineer SQL interview questions might ask you to order rows based on values in multiple columns.

Subqueries

Any candidate interviewing for a data engineer job should know what subqueries are and how to write them.

Sometimes subqueries are described as a query in another query. They allow us to perform complex SQL operations.

Data engineers should also know how to use subqueries with various SQL features: WHERE, HAVING, FROM, SELECT.

Aggregate functions

An essential SQL feature to summarize large volumes of data. Aggregate functions are often used with GROUP BY statements to separate rows into groups and aggregate values for each group. Other times, you’ll need to aggregate data for the entire table.

There are five main aggregate functions in SQL:

- SUM() finds the total sum of all numeric values in a column.

- COUNT() finds the number of rows in the entire table or a group

- MIN and MAX() are used to find the highest and lowest values in a table or a group.

- AVG() calculates the average of all values in the column or a group

You can use these aggregate functions within a subquery to set conditions for WHERE and HAVING clauses.

You can find many examples of SQL Interview Questions involving aggregate functions on our blog.

In this article, we’ll solve interview questions in PostgreSQL, which has a wide variety of aggregate functions. Go to PostgreSQL documentation for a full list.

NULL values

Data engineers sometimes have to do what other data scientists don’t want to do - deal with NULL values.

Removing or replacing NULL values can improve the quality of data, which can make data more accessible and easy to work with.

Before dealing with them, you must understand what a NULL value is. It is not 0 or an empty text (‘ ‘) but an absence of any value. The absence of a value is typically a challenge to performing accurate data analysis.

You can’t use normal comparison operators like > < = to work with NULL values.

You can use IS NULL and IS NOT NULL operators to find values that are (and are not) NULL. Or use functions like ISNULL() to return a specific value instead of NULL.

Finally, it’s important to know how aggregate functions and NULL values work together. For example, how you can use the COUNT() aggregate function to find the number of NULL values.

COUNT(*) returns a number of values including NULL ones, whereas COUNT(column) returns the number of non-null values. The difference between them will be the number of NULL values in the table.

Text functions

In SQL, text values belong to data types such as VARCHAR, CHAR, or TEXT, and they are very common. It’s important for data engineers to be able to use built-in text functions in SQL.

CHAR_LENGTH() returns a number of symbols in the text. LOWER() and UPPER() convert text values to lowercase or uppercase. They help you ensure consistent capitalization of letters, which is absolutely necessary if the task involves case-sensitive functions.

Other important text functions are TRIM() and SUBSTRING(). The former allows you to remove unnecessary spaces before and after text values. The latter allows you to extract a specific portion of the string.

Finally, the REPLACE() function finds substring instances and replaces them with another text.

This was a short list of the most important text functions in PostgreSQL. Refer to the documentation for a full list. The more text functions you know, the better.

JOINs

Data engineers use JOINs to combine data from multiple tables into one. There are four main types of JOINs in SQL.

- INNER JOINs return rows with overlapping values in the shared dimension.

- LEFT JOINs return all rows from the first table but only overlapping values from the second table.

- RIGHT JOINs are the opposite and return all rows from the second table and only matching values from the first table.

- FULL JOINs return combined columns of both tables. If rows do not overlap, it fills absent columns with NULL.

- CROSS JOINs return all possible combinations of columns from both tables.

Understanding various types of JOINs will help you succeed as a data engineer. You should understand their syntax and be able to recognize which type of JOIN is needed for a given task. Knowing how to use the ON statement to define a shared dimension is also important.

Window functions

You can use window functions to create new values. For example, they can be used to assign each record a numerical rank. Or create new aggregate values of the entire table or certain group of rows.

Unlike the GROUP BY statement, aggregate values are stored in a separate column, not collapsed into one row.

Before going into an interview, practice important window functions like ROW_NUMBER(), RANK(), and DENSE(). Learn how to use PARTITION BY to create groups and apply window functions to each group, not the entire table.

In later parts of this article, we’ll see practical examples of window functions and how the aforementioned functions can solve real business challenges.

Array functions

As a data engineer, you’ll have to work with complex data structures like arrays. So it’s important to have a good grasp of array functions.

You can start with learning the ARRAY() command, which takes values and adds them to an array.

ARRAY_AGG() function aggregates all items of the array. ARRAY_APPEND() function allows you to add specific value to an array. Or join two arrays together using the ARRAY_CONCAT() function.

CASE expression

Setting up conditions for filtering and joining tables is an integral part of a data engineer’s job. CASE expression can be used to set up complex conditions for WHERE and HAVING clauses.

You should know how to use CASE expressions to chain multiple conditions. That includes WHEN, THEN, and ELSE clauses, which make up the condition.

It’s important to understand how SQL determines the outcome of a CASE expression. A chain of CASE statements will return the value of whichever condition is met first. If none of the conditions are met, it will return the value of the ELSE clause. If there is no ELSE clause, CASE will return NULL.

DISTINCT

Data engineers use the DISTINCT clause for many purposes. When used with a SELECT statement, it returns only unique values. When used with aggregate functions, it ensures that they are applied to unique values only.

DISTINCT provides a very simple yet effective way to remove (or ignore) duplicate values. For that reason, it is a useful tool at any data engineer’s disposal.

Basic Data Engineer SQL Interview Questions

Now, let’s see these SQL concepts used in practice.

Question #1: Cast Values to Integer

This is a typical data engineer SQL interview question, where you are asked to cast values, remove inconsistencies, and clean up the table.

Cast stars column values to integer and return with all other column values

Cast stars column values to integer and return with all other column values. Be aware that certain rows contain non integer values. You need to remove such rows. You are allowed to examine and explore the dataset before making a solution.

Link to the question: https://platform.stratascratch.com/coding/10056-cast-stars-column-values-to-integer-and-return-with-all-other-column-values

Understand the question

This data engineer SQL interview question is fairly straightforward as long as you take the time to understand what’s being asked.

There are two clear objectives: cast stars values to integer and clear the table of rows that don’t have numeric star values. Otherwise, leave the table as it is and return all other columns as well.

Analyze data

To answer this question, we have to work with a single yelp_reviews table. Let’s look at the types of values in all columns:

As you can see, the stars column stores text values of the ‘varchar’ type.

Business_name, review_id, user_id, review_date, and review_text similarly contain text values.

Funny, useful, and cool columns are integers.

All columns will stay the same except for the stars column. Values in this column will be converted to an integer. We must also remove rows where the stars column contains values other than numbers.

We can see that the stars column contains whole numbers to represent the yelp rating of each business. However, there are exceptions when the value is a question sign (‘?’).

It might look like values in the stars column are already numbers and don’t need to be converted. However, it’s important to understand that the ‘4’ you see in the column is actually text. Not a number 4, but a piece of text, like ‘4 apples’.

Plan your approach

To solve this data engineer SQL interview question, we need to perform two tasks - keep rows where the value of the stars column is a number (not the ‘?’), and convert values of the stars column to the integer type.

Finally, we need to return all columns. Only values in the stars column need to be converted to integers, others are returned as they were before.

Write the code

- Convert stars values

Let’s start by converting stars values from ‘varchar’ to ‘integer’ type.

Let’s select all other columns as well since our query needs to return them all.

In PostgreSQL, we can use the double-semicolon syntax to easily cast the value of the stars column.

SELECT business_name,

review_id,

user_id,

stars :: INTEGER,

review_date,

review_text,

funny,

useful,

cool

FROM yelp_reviewsIn MySQL, you can use either CAST() or CONVERT() functions instead.

- Remove rows with non-numeric star values

As we’ve seen in the previous section, some records have the stars value of ‘?’.

We can simply set a WHERE clause to only keep rows where the value of the stars column is not ‘?’.

SELECT

business_name,

review_id,

user_id,

stars :: INTEGER,

review_date,

review_text,

funny,

useful,

cool

FROM yelp_reviews

WHERE

stars <> '?'That’s it. This query will convert the stars column to integer, and filter out rows where the value of stars column is ‘?’.

Output

Output table should look exactly like before, but the value of the stars column will be of integer type. Also, records with the ‘?’ value in the stars column will be filtered out.

All required columns and the first 5 rows of the solution are shown

| business_name | review_id | user_id | stars | review_date | review_text | funny | useful | cool |

|---|---|---|---|---|---|---|---|---|

| AutohausAZ | C4TSIEcazRay0qIRPeMAFg | jlbPUcCRiXlMtarzi9sW5w | 5 | 2011-06-27 | Autohaus is my main source for parts for an old Mercedes that has been in the family since new that I am restoring. The old beater is truly a labor of | 1 | 2 | 1 |

| Citizen Public House | ZZ0paqUsSX-VJbfodTp1cQ | EeCWSGwMAPzwe_c1Aumd1w | 4 | 2013-03-18 | First time in PHX. Friend recommended. Friendly waitstaff. They were understaffed and extremely busy, but we never knew. The short ribs were tende | 0 | 0 | 0 |

| Otto Pizza & Pastry | pF6W5JOPBK6kOXTB58cYrw | JG1Gd2mN2Qk7UpCqAUI-BQ | 5 | 2013-03-14 | LOVE THIS PIZZA! This is now one of my favorite pizza places in phoenix area. My husband i walked into this cute family owned business and were greet | 0 | 0 | 0 |

| Giant Hamburgers | QBddRcflAcXwE2qhsLVv7w | T90ybanuLhAr0_s99GDeeg | 3 | 2009-03-27 | ok, so I tried this place out based on other reviews. I had the cheeseburger with everything, some fries, and a chocolate shake. The burger was okay, | 0 | 1 | 1 |

| Tammie Coe Cakes | Y8UMm_Ng9oEpJbIygoGbZQ | MWt24-6bfv_OHLKhwMQ0Tw | 3 | 2008-08-25 | Overrated. The treats are tasty but certainly not the best I have ever had. I would have rated this a two star but the cakes and cookies are REALLY pr | 1 | 3 | 2 |

| Marcellino Ristorante | GTUOIBCEGGt_aGp-bRogfg | gopGuEb-ft6cHKMyCZEvJg | 1 | 2010-12-17 | This place sucks. Food was average and we had to wait an hour even though we had a reservation. My americano tasted like warm soy sauce and they blame | 2 | 3 | 0 |

| Shanghai Club | wHfxd0Bq4JYLiUEO55xe4Q | eVQ_yDkMlF62oofUwc29Kw | 5 | 2013-09-19 | This is our favorite Chinese restaurant in the area! The service is always consistent and our favorite waitress - Becky - always makes time to spend w | 0 | 0 | 0 |

| Freddys Frozen Custard & Steakburgers | NfTR_B1yW1hPVEoXlSJV-w | YnHYlN1m7jDhAH9XgR4Dlg | 4 | 2013-01-24 | Love the tiny fries. | 1 | 0 | 0 |

| Chipotle Mexican Grill | k-Oo0Gs4AC04GJAecu_iWg | HjpzhIQFRQbFmc_7CtFDmg | 4 | 2011-05-11 | When you don't feel like a full restaurant and you want more than the normal Mexican fast food, Chipolte fits the bill. The food is always fresh, the | 0 | 0 | 0 |

| Arizona Fire & Water Restoration | uvVmBnYQf8Mnt-s64D8XOg | zQP7cLujr-MJ207uuNFC1w | 5 | 2011-08-03 | This is a fantastic company. They have a very high rating with BBB and have won a ton of customer service awards. Hopefully you dont need them but i | 0 | 0 | 0 |

| Arriba Mexican Grill | 0HOrc_RX87-01dbdFMSjJw | 8m8HQtZox4vS-N-AW3mzxw | 5 | 2013-12-31 | Open Christmas Day! Their food is delicious. Especially breakfast! Mmmm, the salsa mmmmm. Hatch chills, pork, chicken...pollo asada, carne asada...you | 1 | 1 | 0 |

| Renegade Tap & Kitchen | ZaCA3v9bWUpHuwZ6NO8C1Q | iVTzpbZ6qBdFllvcJLbmeg | 4 | 2011-02-26 | Dinner on a busy Friday night. Arrived on time for 7:30 reservation. Table not ready, restaurant and bar full. Host and hostess pleasant, but not o | 0 | 0 | 1 |

| Chipotle | _gcGIGfziNkhaIlkjhjKHg | 3uU_6L8GnFOHTsO4I3oedg | 3 | 2013-09-24 | I absolutely looove Chipotle. My problem is when you make me pay almost $2 extra for guacamole... please don't be stingy with it. Hook it up, Chipotl | 1 | 2 | 1 |

| SanTan Village | LXiDBkXxcyL4IPnXbjw0VQ | Ba-tIR3a8hhwIk-y_hVzFg | 4 | 2011-03-27 | Next month when the temps skyrocket to triple digits, i will prolly not think soo highly of this place, but for now, San Tan has gotten alot of my fre | 0 | 1 | 2 |

| Love's | Er4Y-yj1JBW9cCbIf3ViKg | bC3By-saT9ylKu-dwWgtcw | 4 | 2012-11-13 | Plenty of gas pumps and convenient to get some cooked food inside. If getting gas, caution for enter and exits at pumps. | 0 | 0 | 0 |

| Dirty Drummer | tMYUWXoFuLdFecqqP60R3A | X_kPh3nt0AJPNPHye2rTlA | 4 | 2011-06-18 | I was introduced to this place by my coworker and friend. She goes here every single day at lunch and its cute because everyone knows her there! Any | 1 | 3 | 2 |

| Euro Pizza Cafe | x2atXyt-QwCTzHhglzxj3Q | JKp42Y520azWI_WBzUMxTw | 5 | 2012-04-07 | This is a nice cafe with a diverse menu. There's indoor or outdoor dining with a view of the famous fountain! I ate here with my friend Connie who als | 0 | 0 | 1 |

| US Airways | o_YetnCcK_96ueIULO84fA | Vl4k0FiMNCRzEQwOpe4hXw | 2 | 2012-07-20 | Well, they have good flights and connections from time to time. Their fleet is very archaic though, probably most of their planes are older than me (a | 0 | 1 | 0 |

| Wal-Mart Neighborhood Market | c7JHcdWo5pZ3rMIDbsDt_g | IDHrwv_RCildFvmfWTkj5Q | 2 | 2011-10-02 | I occasionally stop in this store while driving past. I will try to keep my comments on the positive side. NOTE if you don't read anything else: if | 0 | 1 | 1 |

| Flancer's Cafe | 78XeKBmSE0reBjsmqg7HNg | AYGHNy8gPxl2Q-etTT3hZw | 3 | 2012-12-01 | This place has decent food, cute atmosphere, but the service is problematic. I was stuck in Mesa for training and had lunch there on Halloween. My pal | 5 | 7 | 5 |

| Pappadeaux Seafood Kitchen | y6q-inMFFoEci-wRATp1-A | S3bvMOL50vgS_8-TtlGi4w | 1 | 2010-12-02 | $20 for a double Maker's Mark? Me thinks not T.G.I. McPappadeaux. I was not impressed by anything that was there and will not only steer clear but m | 13 | 6 | 6 |

| Lone Star Steakhouse | fp73RBYM6NAnNWii9bxZ8w | 7Ot-v89x44U_VdIPgD3qKg | 4 | 2011-10-18 | Great food and a rare bird of a honest manager when it comes to whats in the food. They have very comfy booths. Did nor care for the country music. I | 0 | 0 | 0 |

| EVO | 3D3Avu2d8Gj-HEbqqWhswg | H982l-WK1p49z9jZFNMEfQ | 4 | 2013-12-29 | Great atmosphere and decor..a welcome change from the chains and anchor restaurants in the area that all feel the same. My wife could not stop raving | 0 | 0 | 0 |

| Conocido Park | 0ESAQ8Ynk1nZPt5LayMwng | 3OelvbzNK3KSmMdL0O9nRQ | 4 | 2013-10-08 | I play this disc golf course weekly. The baskets are frequently moved to keep the course fresh. Trees provide difficulty and also shade! There are Dis | 0 | 1 | 0 |

| Nate's Barber Shop | KEMsCW33Y1ZQEGTiMKmcrA | Hm_7pViZyrp_Z62lBRopAg | 5 | 2012-12-12 | Nates barber shop is one of the best shops that I have encountered in my life. If your looking for a great inexpensive ($13) good looking haircut this | 0 | 0 | 0 |

| S & S Tire and Automotive Service Center | 99_nV5h4JHomT7cgh0V6lg | hYKjQHu2fk4nMgCWO50f-w | 5 | 2011-02-25 | My partner and I needed two new tires and an alignment. We have a Chevy Cobalt and its only two years old. Went to the dealership and they wanted almo | 1 | 0 | 0 |

| La Parrilla Suiza | ldvKeuzBSIesZEmFcr4ooQ | EXvhtd_05d1H9RlXa2CnIQ | 5 | 2012-07-27 | This food is just....fantastic. I don't know what else to say. I grew up eating at La Parrilla Suiza in Tucson. The tortilla soup and "Queso Suiza" | 0 | 1 | 0 |

| Scratch Pastries & Bistro | znMtXO5hY5XPqAMj_7VLRg | b2DKC4kC8-QeSeGZ_MF3XQ | 5 | 2012-03-16 | Yes, it is in a strip mall. Don't let that fool you. This is some downright good food with affordable prices. Even though service was very attentive | 0 | 0 | 0 |

| Joyride Taco House | uyLuLYfjs3S_8u3OkrIdmw | mY6zzvFbK0ENnQOdgtiT4Q | 2 | 2013-10-19 | Great food! But not worth the HORRIBLE SERVICE! Took about 15 mins for drinks that included a dr pepper. The server said the bartender is working hard | 2 | 3 | 2 |

| In-N-Out Burger | nxoxgQka8mTK-rLCh7sg3w | OwVB3YzcYeTRV09tpNDBSA | 5 | 2011-07-08 | Nothing beats a #1 with grilled onions, no tomatoes, well done french fries and a pink lemonade. I've been known to eat here twice to three times a we | 0 | 0 | 0 |

| Roka Akor | fpjKqP8ONJ9rT82VoUhIQQ | 4ozupHULqGyO42s3zNUzOQ | 5 | 2011-07-18 | I hate to admit it, but it had been a long while since my last visit to Roka Akor. I deserve a hand slap. But last week, I had the perfect excuse to p | 5 | 8 | 10 |

| Ruth's Chris Steak House | z3pSiipCrQM3B6i9PrnoGw | hJBOxmNREXmMGTfXgMcGug | 5 | 2010-03-30 | Best steak I have ever eaten is at Ruth's Chris steakhouse. Comes to your table sizzling hot. Sides are sold individually but are pretty good. The des | 0 | 0 | 0 |

| Yupha's Thai Kitchen | arf6Ne6h0UDXizsbMcOomQ | AkJFqLqHHAKY3H5R8p7cPQ | 5 | 2012-12-09 | Yupha Thai is definitely a "yuppy" in terms of being a great spot to go to. There are not too many places to eat near ASU Research Park, which is rig | 0 | 0 | 0 |

| Hotel Indigo Scottsdale | VDtEMw1X397ViDlP7oErTw | 8Oy9-UwJQWffS0yOwPG6Ew | 4 | 2013-07-01 | I stayed here for one night while on a recent business trip! I wish I had discovered this place sooner.... I was pleasantly surprised! I never heard | 0 | 0 | 0 |

| Da Vˆng | xbVGTBSsXmvu56FTbXp7Aw | F6mQhKLdj_PEdxLvDYOm2Q | 5 | 2011-12-15 | I haven't had better pho anywhere in az. the large pho is enormous for the price. i usually go with my family and order a regular sized pho for myself | 0 | 0 | 0 |

| Trader Joe's | HzI7nVlXJQJR3GO1KXYxlA | cMmQsFyrYBv6hIE6NffqZQ | 5 | 2011-01-03 | Trader Joe's always goes above and beyond in all of their services. The food is fresh and delicious, the prices reasonable and the people are great. | 0 | 0 | 0 |

| Beaver Choice | 18fIpXUbcm9k6Pmtkbf0aA | 3gIfcQq5KxAegwCPXc83cQ | 4 | 2011-04-21 | So I went here tonight with a friend. I was really excited and nervous to try this place after reading the reviews. So you walk in and walk up to the | 1 | 1 | 0 |

| Some Burros | Oqogqje3RKspPwVcREfsXA | GnqNc74So5Pc8C3hkA2hCg | 5 | 2009-07-10 | Came here w/ the hubster's. I've actually been craving this for some time now. The pollo fundido is great! So goooooood! Its a pretty big portion. The | 0 | 2 | 1 |

| Superstition Ranch Market | B1xnRb2j_iW2Ws0u1B0FNw | EOLRikjQxTIpXB4aV1hbPQ | 5 | 2012-01-05 | Great place to shop, buy what you will use within a few days. | 0 | 0 | 0 |

| Fuego Tacos | J6nrjjCjXc-hnRpZZPrLnQ | A99dyhEqcd_yXKPfBWeZHA | 3 | 2011-08-18 | I went to a late lunch on a Saturday and the Esplanade area was quite dead (I'm surprised because in the old days ('98-'99), I remember it to be prett | 0 | 0 | 0 |

| The Woodshed | #NAME? | lsp7p2NuC5MX4_iuch3_OA | 1 | 2012-06-17 | We only went here because it has been a traditional Father's Day event with friends. We decided to join them for the 1st time......really...we will n | 0 | 1 | 0 |

| Rosati's Pizza | Ld4Qg2Du0S3ulcdDCdm7Jg | SEDJTWEzMdqp7UsS1W3KXw | 3 | 2012-10-24 | First of all, let me just say their food is fantastic. I love their pizza. I love their salads. I love the cheesy garlic bread and their chicken parm. | 1 | 0 | 0 |

| Sekai Sushi | 8TB8vM1H_SuEK2hS-5wu7g | TDlgqAxf268QOw-OUk2Urw | 2 | 2012-05-02 | I am sorry to say people of Mesa you must have pretty low standards if this is the best sushi bar in Mesa. I went there last night and I really did no | 0 | 2 | 0 |

| D'Vine Bistro & Wine Bar | JvHH1Z84UJ1P5T9uIxEnyQ | rT4ycOjlrKefSAcjoQga5g | 4 | 2012-04-12 | Love the atmosphere and fantastic happy hour! Favorite spot in Mesa:) | 0 | 1 | 0 |

| Scottsdale Stadium | eAYq_HT_gbD_ECgIWn3GoA | Mt3dPqOlnlGyVCftCcokmg | 4 | 2012-03-27 | Ignoring the fact that Scottsdale Stadium is a bit overpriced these days for Spring Training Giants tickets, its fun. Its basically one big party (in | 0 | 3 | 0 |

| Thai House | #NAME? | fczQCSmaWF78toLEmb0Zsw | 4 | 2008-07-21 | Damn... Helen Y beat me to the punch and got the FTR for this place! Oh well, I will say that she did a great job with her review - I think I was Tha | 3 | 8 | 7 |

| Jalape–o Inferno Bistro Mexicano | VRiSQiIfUnZdp0CxNMkLWg | 4nJ5ryQTcQKs8mCrgt8-BQ | 2 | 2011-04-05 | The dinner we had here was OK... nothing to rave about. The tortilla chips were very good as others have mentioned... a mix of corn and deep fried flo | 0 | 0 | 0 |

| Caffe Boa | hi6a3fvAbtZq9jMIM8gkwQ | qa05pUVNapADHZXpHMPMeA | 3 | 2010-06-26 | Caffe Boa is an interesting jokester, so, it is interestingly hard to review it. I'll keep it short. I have gone here several times, and each time wit | 0 | 0 | 0 |

| Golden Valley | ZYEAmRpYHxJYcbIv-c7S2w | gg_OKjOAl_vVmdh5ZETuiw | 3 | 2012-02-25 | Can I tell the difference between Uzbek cuisine and other Middle Eastern or Mediterranean cuisine? Nope. For all I know it's only a matter of where | 0 | 0 | 0 |

| Canyon Cafe | gi9hLYOPk_fbvOr2mCHu7g | nH9OZEGfgseWjC5_IPGCXw | 5 | 2010-08-09 | Everything about this place is wonderful!! I love the huge windows and outdoor patio! Gorgeous! Their food is amazing everything from the chips (there | 0 | 0 | 1 |

| Panda Express | r52OE-CfRoJQyjBtn0vHIQ | fMyKbyYY9Poy9B_1QZPKcg | 1 | 2010-05-31 | My young children love Panda Express orange chicken. They eat it 2-4 times a month. We were at the mall on a Saturday afternoon and stopped into the f | 0 | 0 | 0 |

| Gordon Biersch Brewery Restaurant | pfPFWY5SXQEEnlVJbFaNqA | nKaR5Z9Qmqc4RsakLLX_7w | 3 | 2010-03-29 | It's very hard for me to enjoy a house brewed schwarzbier that, to me, tastes more like Bud Light with a hint of acrid smoke flavor than what I consid | 2 | 4 | 3 |

| Chili's Grill & Bar | 42EOZ0KMF4wU1Sz7oeLfpA | AfyzIHPy5zds_mqf2Jdc9g | 3 | 2011-02-07 | I love Chili's. My husband and I always go for the 2 for $20 deal at whatever Chili's location we may be, but this location seemed to skimp out on the | 0 | 0 | 0 |

| Beckett's Table | lNLiQx1zi-ctta6v4LLhXw | GJwbccjXgoRPbNuWcNKYXA | 5 | 2012-01-29 | Beckett's Table is a fantastic restaurant for people who want great food, great wine, and great service. My wife and I dined there with a couple of fr | 0 | 0 | 0 |

| My Big Fat Greek Restaurant | IuSys52QuyTxGv3HLFKBSw | 1gY1N3pkxTzh7kK4BxANyw | 4 | 2011-05-07 | I hated Greek food, until I tried this place. My "health nut" girlfriend had to drag me to this place, kicking and screaming. After all, my long ago | 2 | 3 | 3 |

| Goldmans Deli | 6DggWM9rgzC_mIo4THFpMA | PKZvqm3IeWiWBYoDDoEG4w | 5 | 2012-09-02 | Traveled in from the east coast so I got to Arizona very early in the day. I needed to kill some time before I could check into my hotel room so I en | 0 | 0 | 0 |

| Macayo's Depot Cantina | 98nvcyGhtHlKO8pDlOcCsA | bZFRqP7s0Vszxeu8_IwYow | 3 | 2008-03-21 | I finally ate lunch here after not being able to get decent parking for Quiznos on Mill Ave. My coworker and I were starving and needed a place to sh | 0 | 2 | 3 |

| Rayner's Chocolate & Coffee Shop | GCdNDjutQWsT-qaYwW0zxw | M28A6JPQFBJnRBCfODe8IA | 4 | 2013-05-15 | Cute bakery/coffee shop hidden in a little plaza on 51st Ave off of Thunderbird Rd. Nice selection of unique baked goods, chocolates and coffee drinks | 0 | 1 | 0 |

| Sushi Brokers | up3ueFZ1xJh_ts6dVu3_0A | hDlSSyDreM9xY4yQWPm54w | 2 | 2009-02-26 | --expensive for business lunch --servers very attentive, prompt --lacks nth-degree detail of a Japanese chef running things; rolls and standards are s | 4 | 3 | 3 |

| The Vig Uptown | rib7dXO863eL5VGUDsot8g | uQCk37gNl1bEmkjAv6_kAw | 4 | 2011-04-17 | My meal was great; the decor / layout is great also, with a lovely patio out back. The only downsides are parking, and the fact that the entrance is a | 0 | 1 | 0 |

| Lo-Lo's Chicken & Waffles | IlFoK4meMZ7Ws4enESzeTQ | EacK6XwZjsTD6QYSIRlJ7Q | 5 | 2008-07-14 | At first most of my noobie friends are very skeptical about the fusion of Chicken and Waffles. but after taking them to this place and experiencing f | 2 | 3 | 2 |

| Shoe Carnival | YhlJA_CuoZlK4FIJUHlCnw | _PzSNcfrCjeBxSLXRoMmgQ | 2 | 2010-05-17 | I had a $5 coupon in the mail so I was like what the heck. And is it next to Home Goods (one of my favie home decoration stores). I got to the store i | 2 | 1 | 0 |

| My Big Fat Greek Restaurant | Qe0FO565tGfTxb7QtNCVwg | 2vl3MXKr8iQOWTNse5kgdw | 3 | 2008-04-03 | Nice menu selection; food was tasty. Good atmosphere. Wished they had a restaurant in the LA area. | 0 | 1 | 0 |

| Casey Moore's Oyster House | R7ZJPW4qEXuqI41aaWmO0A | rLtl8ZkDX5vH5nAx9C3q5Q | 4 | 2009-04-02 | This is a fun place for appetizers and drinks if it is not too crowded and the temperature is just right outside. Otherwise the inside gets way too p | 1 | 2 | 1 |

| Q to U BBQ | IJxqQwzJjAURPBAB_-iOAA | MSgZpSWlf8T2H_46OWNgCQ | 5 | 2011-09-08 | Really enjoyed the Ribs and fries, I came with friends that really like BBQ, they agree, the ribs were terrific! I am sure we will be going back to Q | 1 | 1 | 1 |

| Lightning Lube | kezCWAz6MO1wKwXB_DK-3Q | bwmXfjwrogAaGqV33kSVpQ | 3 | 2013-08-24 | $115 for an oil change and two air filters for my civic. I must've had a really long day or she had magical powers and made me forget I could simply c | 0 | 1 | 1 |

| Athens Gyros | aAgVzZU2b0YbYGi4byeI6w | L8_GwFxxtGSYR2F_dglpSg | 4 | 2013-08-17 | Great food nice service. The girl that worked up front introduced herself to the other patrons that were there and asked them how the food was but no | 0 | 0 | 0 |

| Metro Light Rail | lG8Swugg_DQxY3NgT_BEig | LqgGgWi3FLHBViX9tmZ9sw | 3 | 2011-10-31 | I just wish that this stupid HUGE metropolis could have more LIGHT RAIL connections!! compared to the circus you have to stand in the buses, the LR is | 1 | 1 | 1 |

| Oregano's Pizza Bistro | 7QvgM_LJi6SRp_GuOXPFZQ | for16MiFS1M_8_cne6IbIw | 4 | 2013-02-04 | Big Delicious portions! | 0 | 0 | 0 |

| Gallo Blanco | gULD5qz_CQI9clPWh2FNHA | Z02XdD0muEz2FFQKPERMYQ | 5 | 2013-12-29 | Stopped in here one night right before Christmas. Short and sweet: Margaritas - very good (and huge by the way) Tacos - awesome Guacamole - exce | 2 | 2 | 2 |

| Los Dos Molinos | JcWhDcyNl3r_Tbeqiac15Q | GoymUzKqvET2QOZkIWZi9w | 4 | 2014-01-07 | We have a friend who said the salsa is way too hot. All I heard was "you must try Los Dos Molinos". We love spicy food and are always up to a challeng | 0 | 1 | 1 |

| Nancy's Nail Salon | ZGo8c57MrzQrSN6R7zO1uQ | A9g7YnTtsSV-wEIo3HI1YQ | 4 | 2011-06-09 | Came here with my sister in law she had a coupon for a 27.99 mani/ spa pedi. The staff was friendly they have a tv and plenty of magazines to look thr | 0 | 1 | 0 |

| Changing Hands Bookstore | 1xzMe1EEwhF23RNh3InKkQ | fPHLPrymsyb6WSFFKoMrTQ | 5 | 2010-10-26 | This not-so-little bookstore has it all... new and used books, a unique gift section, book signings and events, wonderful staff and a cool, organized, | 0 | 0 | 1 |

| Tortilla Fish | OmSYYxZskG9BeRMwb5Dltw | MxO7EY766jVoFEZzkpwmOQ | 2 | 2013-10-06 | My experience wasn't bad, just not up to the hype of all the other reviews. I tried the shrimp, fish, campechana and machaca tacos. The shrimp tacos w | 0 | 0 | 0 |

| Pet Club | 0LvO1yc-52fJ6vIHaFVdAw | LXOhR4ZUULSbBNztxYZ2dQ | 2 | 2013-07-16 | That awkward moment when local competitors come write negative reviews about a store and then direct traffic to their own store.... | 0 | 1 | 1 |

| Taste of Tops | J2lGBvJOcuhmauWs3rgMSg | aIAjAU-6NH583EkQ6E9KRw | 4 | 2009-10-09 | Okay, in interest of full disclosure, I literally live around the corner and across the street from Tops Liqour and have been waiting forever for this | 1 | 1 | 1 |

| Carolina's Mexican Food | N6eg6Jc_mL_XHMGmw6GElw | cbxUyCUMjkWAs1h4auYeAw | 4 | 2012-01-31 | If you're looking for real Mexican in a hurry this is your place. This is one of places ill always bring out of town guests who can't find good Mexica | 0 | 0 | 1 |

| Super L Ranch Market | cK3J7FAqruLZM_Y5J29Q8Q | z06IHGXI_ofBc2DkAbCgnA | 5 | 2011-05-09 | HOLY CRAP THEY HAVE FROZEN XIAOLONGBAO. :) These delicious little bites of porky, soupy dumpling heaven have eluded me since I first tasted them in | 2 | 2 | 1 |

| Salt Cellar | IXGX_Lk2NgCH-0OQNcGMpQ | E4HbTIHd9PVjUnEKpysaLw | 5 | 2011-10-04 | My experience here was absolutely amazing. My boyfriend and I had reservations for 7:30 pm on a Saturday night and the service was amazing and the fo | 0 | 2 | 1 |

| Rosita's Place | f_yQqlsim0S9YAIIYFvR5Q | 7nlZJW84Adt6oYn2shnn_g | 3 | 2013-05-30 | The food here is delicios, but it takes forever. From the time I ordered to when I was served 25minutes. Come only if you have time to spare to sit an | 0 | 0 | 0 |

| Matador Restaurant | x927gFqVNPSOPwNrKqPmmQ | nyHh14Vb9S269-kGKaUelg | 4 | 2011-08-09 | I've eaten at Matador almost once a month for about15 years. I won't get into the logistics of others reviews. I give the higher than average star rat | 0 | 0 | 1 |

| Hanny's | riZp_RIN28ld-U2Q5dhKhA | GRgBu4K7GOb3354esp_xkg | 4 | 2010-06-08 | Food = 4 stars Place = 5 stars Service = 4 stars I REALLY like this place. It looks super classy inside and the deco on both floors is a | 3 | 3 | 3 |

| Crust Pizza and Wine Cafe | rTc3d_GYXyHuf_tQoED80g | AOmdmYYSeLUstcN084_wMA | 1 | 2012-10-16 | I told my friend that I'd rather eat out of a vending machine, and he said.. "Yelp THAT!" I had the calamari app, the caprese salad and the eggplant | 2 | 1 | 0 |

| Ocean Air | 9gLTx4HjE-NeSa3KTfGJJQ | K0U0Hp6rgXHrYCG4jpPT8w | 5 | 2013-09-01 | Thank you! Ocean Air came highly recommended and now I know why!! Excellent, fast, friendly service!!! Reasonably price AC maintenance and FAST respon | 0 | 0 | 0 |

| Sleepy Dog Brewpub | 9gtyU7vjWUjddmFrT97sww | Fm0EXFwIfDQoIm9RgcAOKQ | 3 | 2013-04-06 | As a number of others have said, the beer is good with a good number of choices. The food is pretty good too. The biggest issue has been service. The | 0 | 0 | 0 |

| unPhogettable | yVK0x3_-o16ufBbIyqGJRw | sWh4Tjwa8ch_rziHtTN9LA | 5 | 2013-08-27 | Always amazing service! Always amazing pho! Add veggies to a meat dish! The spring rolls A1 & A6 are the best in town!!! We are now here on a weekly b | 1 | 1 | 1 |

| Paradise Valley Burger Company | bkZ67PfRlKLKl4x5mIFYSg | ff00OcqImnNYy-OvSgUZyw | 5 | 2013-11-04 | Best burgers in town! They don't skimp on quality or ingenuity. | 0 | 0 | 0 |

| Hanny's | 4n_3G2Xux0stcgOUsrzYaw | ev7D2jo5OUDeHf0dWoWlsQ | 2 | 2012-06-25 | Super disappointing, they won't take reservations and I wanted to make a reservation for 20 persons. I've been here many many times and love the food | 0 | 0 | 2 |

| Golden Panda | ikNpO72tj7uI5VTatHpoAA | 80OFMLRA0yW3sE4ciYg_vA | 1 | 2009-10-10 | Why oh why is it so hard to find good Chinese food in this town? The two behind the counter at this place are Chinese - please don't tell me you eat | 0 | 0 | 0 |

| Breakfast Club | U_oJEB166nCeBNY-wqadxw | AMYi-53cxstrCR5wqyY1KA | 5 | 2011-01-11 | O-M-Goodness! What luck to have eaten here for breakfast!! Huge portions served with fresh fruit slices or mixed berries. Great service and very nice | 0 | 0 | 0 |

| Ulta Salon Cosmetics & Fragrance | 9e3MOWg4zrq_NOqKP3fMcQ | F6QsMoJdvtohlbnST-fDyQ | 4 | 2011-03-14 | I really like this store and have been going here for as long as they have been open. 18 or 19 years. It is always exciting to be rewarded for the pro | 1 | 1 | 0 |

| Essence Bakery CafŽ | NkekoPY-4txUxkyoN_Tu4w | DrWLhrK8WMZf7Jb-Oqc7ww | 5 | 2012-09-14 | Ok, I'm not sure I ever had this pastry combination, but it was clearly a great item. It was the Chocolate almond croissant. Usually those two varieti | 0 | 1 | 0 |

| zpizza | UJEPSoO6yNnR8kdneDy0rg | fSi-yrKtBD58h2vPxjNE1A | 4 | 2010-12-01 | We ordered 4 rusticas for delivery using their buy-one-get-one promo online. They had a great deal on rustica pizzas, but somewhere in the fine print | 2 | 1 | 1 |

| Kona Grill | osYRF4FQe4cziGIXz33eQQ | gITFg65GtRDUb-0n460vNg | 4 | 2011-03-17 | I've eaten at Kona Grill twice in two days while doing business in the area. I had the Kona Burger and the Pepperoni Pizza. The burger was fantastic | 0 | 0 | 0 |

| White House | xn2LkVHBuRZ_jAg-LIiQ4Q | wFweIWhv2fREZV_dYkz_1g | 4 | 2011-07-25 | It's been a while since I took part in the nightlife in Scottsdale, it's not my scene but I was invited to White House for a party so you know how tha | 3 | 5 | 4 |

| Crowne Plaza Resort Hotel San Marcos Golf Resort | bMKW11Cf1Zeu1zWkzDbtrQ | Kt9NwDONle_mc0QHTud9jw | 1 | 2011-07-18 | Rating the golf course, horrible!! Thank goodness there was no one in front of us and we zipped around the course. They clearly stopped maintaining | 0 | 0 | 0 |

| Hon Machi | mu8Gst6LkzG5ahmolCH55g | KucBnMrhalzxnD9AWrxwYQ | 5 | 2011-06-21 | Great place for sushi and tepan - period. Not the "high-end" places but a rock solid place with lots of variety and good prices. | 0 | 1 | 0 |

| Bourbon Steak a Michael Mina Restaurant | AA6QQUFGWWkZlbpat46OfQ | cEIeuU0-4fX0Y4qCUW3PwQ | 2 | 2008-12-01 | My husband, some friends and I went to this place for restaurant week. We all sampled different dishes to experience a range of items - the multi-flav | 0 | 1 | 2 |

| Tempe's Front Porch | J71o5dOSoxoOhcR8NEo4Og | R4Ax3btoJ6qLXhqq6J50VQ | 4 | 2014-01-06 | This is the front porch of Monti's, my boyfriend and I were pretty confused looking for it. It's outdoors with a bunch of heating lamps, be sure to s | 1 | 2 | 1 |

| Joyride Taco House | pKe_ORPqaW0vfGyFkbxdHw | xv9nUSKR5RqnkgD0tufTfA | 4 | 2013-12-02 | Tried this place tonight with my boo and I am definitely a fan. He loved his carne asada burrito and my enchiladas were super tasty. They are a littl | 0 | 0 | 0 |

| Lunardis | fpjKqP8ONJ9rT8209thIQQ | 9itypHULqGyO42s3zNUzOQ | 5 | 2018-06-11 | This is the nicest grocery store in the city. I actually met my wife at this grocery store while shopping for avocados. | 6 | 7 | 10 |

Question 2: Arrange worker records based on values in two columns

In this question, aspiring data engineers are asked to arrange names in an alphabetic order.

Sort Workers in Ascending Order by First Name and Descending Order by Department

Sort workers in ascending order by the first name and then in descending order by department name.

Link to the question: https://platform.stratascratch.com/coding/9836-sort-workers-in-ascending-order-by-the-first-name-and-in-descending-order-by-department-name

Understand the question

The premise of this data engineer SQL interview question is fairly simple. We need to arrange records based on names (text values) in two columns.

The question only tells us to sort records in ascending and descending orders. However, since values are text, it means we need to order rows alphabetically.

There’s a twist to this question - for one column (first names), we must order in ascending order (from A to Z). For the second column (department names), we must order them in descending order (from Z to A).

Analyze data

All the necessary information is contained in a single workers table. Let’s start by looking at the types of values in each column.

We have six columns.

- worker_id contains integer values to identify workers. Most likely, we won’t have to work with this column.

- The first_name column contains first name values. We will order records by looking at values in this column.

- We don’t need to work with values in the last_name, salary, or joining_date columns.

- Department names are stored in the department column. We will order records by looking at values in this column.

Looking at the table, all values look standard. It’s worth noting that first and last names are capitalized.

Plan your approach

This question asks us to order rows based on values in two columns - first_name and department. We don’t have to change rows or values in them.

We need to select all rows and use the ORDER BY statement to arrange them in a specific order.

It’s important that you know how to order rows according to values in two columns.

Write the code

Try to write the code yourself.

Output

The correct query should return a sorted table.

All required columns and the first 5 rows of the solution are shown

| worker_id | first_name | last_name | salary | joining_date | department |

|---|---|---|---|---|---|

| 9 | Agepi | Argon | 90000 | 2015-04-10 | Admin |

| 4 | Amitah | Singh | 500000 | 2014-02-20 | Admin |

| 8 | Geetika | Chauhan | 90000 | 2014-04-11 | Admin |

| 12 | Jai | Patel | 85000 | 2014-03-21 | HR |

| 13 | Jura | Jomun | 980000 | 2013-05-20 | HR |

| 10 | Moe | Acharya | 65000 | 2015-04-11 | HR |

| 1 | Monika | Arora | 100000 | 2014-02-20 | HR |

| 11 | Nayah | Laghari | 75000 | 2014-03-20 | Account |

| 2 | Niharika | Verma | 80000 | 2014-06-11 | Admin |

| 7 | Satish | Kumar | 75000 | 2014-01-20 | Account |

| 6 | Vipul | Diwan | 200000 | 2014-06-11 | Account |

| 3 | Vishal | Singhal | 300000 | 2014-02-20 | HR |

| 5 | Vivek | Bhati | 500000 | 2014-06-11 | Admin |

Question 3: Find the last five records of the dataset

In this data engineer SQL interview question, data engineers are asked to select a specific part of a large dataset.

Last Five Records of Dataset

Find the last five records of the dataset.

Link to the question: https://platform.stratascratch.com/coding/9864-find-the-last-five-records-of-the-dataset

Understand the question

Description of this question is fairly simple.

Before attempting to answer the question, it’s a good idea to look at available data and examine values, their types, and the ordering of records.

Analyze data

There is one available table named worker. We already worked with this table in the previous question.

In this case, we are not concerned with any of the six columns or values contained in them. We simply have to find and return the last five records.

However, it’s important to look at the actual table (with data) to see if we can notice anything that will help us find the last five records.

Without further ado, let’s look at the worker table with actual data:

As you can see, every record has its own unique worker_id value.

It’s safe to assume that records are arranged in ascending order according to worker_id values. Double-check with the interviewer to confirm this pattern. Once you do, solving this data engineer SQL interview question becomes much easier.

Plan your approach

Before we can find and extract the last five records, we need to define the criteria for identifying them.

We’ve seen that each record has its own unique identifier of integer type. As long as these numbers are unique and increasing, counting the number of all rows will give us the id of the last record.

We can use that information to get the worker_id value of the last five records and set up the condition only to keep rows with those ids.

Or we can find the worker_id of the fifth row before the last and keep records where worker_id is higher than the threshold.

Write the code

Practice your SQL skills by answering the question yourself.

Output

Your final query should return the last five records.

All required columns and the first 5 rows of the solution are shown

| worker_id | first_name | last_name | salary | joining_date | department |

|---|---|---|---|---|---|

| 9 | Agepi | Argon | 90000 | 2015-04-10 | Admin |

| 10 | Moe | Acharya | 65000 | 2015-04-11 | HR |

| 11 | Nayah | Laghari | 75000 | 2014-03-20 | Account |

| 12 | Jai | Patel | 85000 | 2014-03-21 | HR |

| 13 | Jura | Jomun | 980000 | 2013-05-20 | HR |

Question 4: Primary Key Violation

In this data engineer SQL interview question, you have to find duplicate cust_id values and return the number of times they are repeated. You can find other Amazon Data Engineer Interview Questions on the StrataScratch blog.

Primary Key Violation

Last Updated: May 2022

Write a query to return all Customers (cust_id) who are violating primary key constraints in the Customer Dimension (dim_customer) i.e. those Customers who are present more than once in the Customer Dimension. For example if cust_id 'C123' is present thrice then the query should return two columns, value in first should be 'C123', while value in second should be 3

Link to the question: https://platform.stratascratch.com/coding/2107-primary-key-violation

Understand the question

The question has detailed instructions, but it can be confusing. Make sure to read it multiple times to get to the essence of the question.

To solve this challenge, you must know that primary keys are unique identifiers for each record. They can not be repeated. The question refers to this as the ‘primary key constraint’.

There are also instructions for how to format the output. We need to return rows with the duplicate cust_id values and the number of times they appear.

Analyze data

Looking at the available data could help you better understand the question.

Let’s start by looking at the types of values in every column of the only available table named dim_customer:

To solve this data engineer SQL interview question, we’ll have to find duplicate cust_id values and the number of times they are repeated. This column contains text values, specifically of the ‘varchar’ type.

cust_name, cust_city, cust_dob and cust_pin_code values are not important.

Plan your approach

In simple words, we need to find duplicate cust_id values and the number of times they are repeated.

The simplest way is to create a group of rows for each unique cust_id value, find the number of rows in each group, and return those with more than one row in the group.

Then return the number of records in each group. This will return the number of rows with the same cust_id value.

Write the code

Now that you have a logical outline of what to do, try to match it by writing the SQL query yourself.

Output

Query should return two columns - the key and the number of times it was repeated.

All required columns and the first 5 rows of the solution are shown

| cust_id | n_occurences |

|---|---|

| C276 | 2 |

| C281 | 2 |

| C274 | 3 |

Advanced data engineer SQL interview questions

Let’s also look at some of the more difficult SQL questions asked during data engineer interviews.

Question 5: Verify the first 4 numbers of all phone numbers

In this question, we have to validate data by checking phone number values and confirm that they begin with specific 4 numbers.

Verify that the first 4 digits are equal to 1415 for all phone numbers

Verify that the first 4 digits are equal to 1415 for all phone numbers. Output the number of businesses with a phone number that does not start with 1415.

Link to the question: https://platform.stratascratch.com/coding/9737-verify-that-the-first-4-digits-are-equal-to-1415-for-all-phone-numbers

Understand the question

This data engineer SQL interview question asks us to verify that all phone numbers begin with 1415. It’s safe to assume that one of the columns contains phone number values. We will have to make sure that the first four symbols of values in this column are 1415.

It’s unclear what kind of answer we need to return. It could be binary - to show whether or not all phone numbers begin with 1415. Or we might have to return the number of phone numbers that begin with 1415 or do not begin with 1415.

In situations like this, you should ask the interviewer for specific instructions.

Analyze data

The sf_restaurant_health_violations table contains information about businesses, including their phone numbers.

Let’s take a closer look at the types of values contained in each column.

The table has a lot of columns.

The only column that contains a phone number is business_phone_number, so it’s safe to assume that we need to check values in this column.

We can confirm our assumption by looking at the actual table above.

It's important to note that not all rows have values in this column. Some are empty.

Plan your approach

To solve this question, we have to filter rows by two criteria - the value in the business_phone_number column is not empty. If it has a number, it must start with 1415.

Let’s assume that the question asks us to return the number of rows where the phone number does not start with 1415.

Finally, we should return the number of rows that satisfy both criteria.

Write the code

1. Get the number of all rows

We can use the COUNT() aggregate function to get the number of all records in the table.

SELECT COUNT (*)

FROM sf_restaurant_health_violationsCOUNT() will return the total number of records in the table. Output will be collapsed into one row.

2. Set up two conditions

To get the answer, we need to find the number of records that satisfy the two aforementioned criteria.

We can add a WHERE clause to remove records that do not meet the criteria.

SELECT COUNT (*)

FROM sf_restaurant_health_violations

WHERE business_phone_number IS NOT NULL

AND LEFT(business_phone_number :: TEXT, 4) <> '1415'The COUNT(*) aggregate function will apply to filtered records.

We use the LEFT() function to take four symbols from the left side (beginning) of the value, and compare it with 1415.

Output

Our query will return the number of phone number values that exist but do not start with 1415.

All required columns and the first 5 rows of the solution are shown

| count |

|---|

| 1 |

Question 6: SMS Confirmations From Users

In this data engineer SQL interview question, data engineers must identify and get the number of invalid records. It is one of many Facebook Data Engineer Interview Questions.

SMS Confirmations From Users

Last Updated: November 2020

Meta/Facebook sends SMS texts when users attempt 2FA (two-factor authentication) to log in. The fb_sms_sends table logs all SMS texts sent by the system.

However, due to an ETL issue, this table contains some invalid entries, specifically, rows where type = 'confirmation' or other unrelated message types (like friend requests). These records should be ignored.

Only rows with type = 'message' represent actual 2FA texts that were sent to users.

Use the fb_confirmers table to identify which of these messages were successfully confirmed by users.

Calculate the percentage of confirmed SMS 2FA messages (where type = 'message') sent on August 4, 2020.

Link to the question: https://platform.stratascratch.com/coding/10291-sms-confirmations-from-users

Understand the question

Read the description of this question multiple times because it’s a lot to take in at once.

In short, a table contains records that should not be there. Specifically, confirmation and friend request records. It’s our job to identify misplaced records.

Finally, we are asked to find what percentage of confirmation texts were valid on August 4.

Analyze data

You’ll have to work with two tables to find the answer.

One is the fb_sms_sends table. The question tells us that some of the records in this table are invalid.

The other fb_confirmers table contains correct confirmation records.

We need to compare these two tables to calculate the percentage of confirmed texts for a specific date.

Let’s look at columns in each of these tables:

the ds column contains datetime values, most likely the date of sending a message. We will need to check this column to find sms confirmations for the 4th of August.

The question does not ask us anything about the location or network of the user, so we can ignore the country and carrier columns.

Values in the type column might help us identify whether the record represents a valid message, or false records.

The phone_number contains a phone number that receives the confirmation message.

Let’s move on to the next table, which contains correct records of confirmation messages.

Here we have just two columns, values in the date column indicate when the message was sent. Phone numbers are stored in the column of the same name.

Some of the confirmation records in fb_sms_sends table represent an unsuccessful attempt. We need to check them against information in the fb_confirmers table, which contains records of successful confirmations.

Plan your approach

We need some way to identify ‘confirmation’ and ‘friend request’ records that should not be in the fb_sms_sends table. We can do that by merging two tables on shared dimensions of the phone number and the date. If records from the ‘unverified’ table are not in the valid fb_confirmers table, it means they are invalid.

We will also have to set up a condition to only keep rows for messages that were sent on the 4th of August 2020 and remove messages sent on all other days.

Finally, we need to calculate what percentage of all messages were confirmed. Once we merge the table, we can get the number of records from the fb_confirmers table that are also present in the fb_sms_sends table. Then find the number of all records of the ‘message’ type and calculate the percentage.

Write the code

1. Perform a LEFT JOIN to filter out invalid records

To compare valid and invalid confirmation records, we need to combine data from two tables. The safest bet is to perform a LEFT JOIN, which will keep all records from the fb_sms_sends (including invalid records) and only match values from the fb_confirmers table.

SELECT *

FROM fb_sms_sends a

LEFT JOIN fb_confirmers b ON a.ds = b.date

AND a.phone_number = b.phone_numberWe will use the ON clause to define two shared dimensions - the date when the message was sent and the phone number.

In the combined table, records from the fb_sms_sends table that don’t share the date and phone_number values will have empty columns. This will help us identify messages that were not confirmed.

All required columns and the first 5 rows of the solution are shown

| ds | country | carrier | phone_number | type | date | phone_number |

|---|---|---|---|---|---|---|

| 2020-08-05 | PK | at&t | 9812768962 | friend_request | 2020-08-05 | 9812768962 |

| 2020-08-06 | LT | sprint | 9812768973 | friend_request | 2020-08-06 | 9812768973 |

| 2020-08-06 | VC | at&t | 9812768976 | friend_request | 2020-08-06 | 9812768976 |

| 2020-08-04 | SZ | rogers | 9812768988 | friend_request | 2020-08-04 | 9812768988 |

| 2020-08-04 | IT | at&t | 9812768991 | message | 2020-08-04 | 9812768991 |

2. Filter records by send date and their type

The question specifically tells us that we need to work with messages sent on the 4th of August in 2020. Looking at the table, we can see that dates are written in the ‘MM-DD-YYYY’ format.

We need to chain another condition to specifically select records of the ‘message’ type.

SELECT *

FROM fb_sms_sends a

LEFT JOIN fb_confirmers b ON a.ds = b.date

AND a.phone_number = b.phone_number

WHERE a.ds = '08-04-2020'

AND a.type = 'message'Running this query will only keep message records where the value of ds column is ‘08-04-2020’ and has a type of ‘message’.

All required columns and the first 5 rows of the solution are shown

| ds | country | carrier | phone_number | type | date | phone_number |

|---|---|---|---|---|---|---|

| 2020-08-04 | SA | at&t | 9812768913 | message | ||

| 2020-08-04 | HN | at&t | 9812768921 | message | ||

| 2020-08-04 | TJ | rogers | 9812768934 | message | ||

| 2020-08-04 | IT | at&t | 9812768991 | message | 2020-08-04 | 9812768991 |

| 2020-08-04 | NR | rogers | 9812768997 | message |

3. Calculate the percentage of valid confirmations

We need to find the percentage of valid confirmation messages vs. all messages from the fb_sms_sends table.

Looking at the output of the previous step, we know that valid confirmation records from the fb_confirmers table have date and phone_number values.

We can use the COUNT() aggregate function to find the number of valid confirmation records and the number of all messages.

Provide phone_number or date columns as an argument to the COUNT() function to find the number of records with values that are not NULL.

Repeat the same to find the number of all message records. Only this time, the argument to the COUNT() function should be one of the five columns where all records have values.

Then divide two aggregates, and you’ll have a decimal ratio. Finally, multiply the ratio by 100 to get the percentage value.

SELECT COUNT(b.phone_number)::float / COUNT(a.phone_number) * 100 AS perc

FROM fb_sms_sends a

LEFT JOIN fb_confirmers b ON a.ds = b.date

AND a.phone_number = b.phone_number

WHERE a.ds = '08-04-2020'

AND a.type = 'message'The result of the COUNT() function is always a whole number. Don’t forget to convert it to a float so it can be divided by another whole number.

Finally, use the AS keyword to give the final column a descriptive name.

Output

Query should return one row with a single percentage value.

All required columns and the first 5 rows of the solution are shown

| perc |

|---|

| 20 |

Question 7: Find the first 50% of the dataset

In this question, candidates have to return the first half of all rows in the table.

First 50% of Records From Dataset

Find the first 50% records of the dataset.

Link to the question: https://platform.stratascratch.com/coding/9859-find-the-first-50-records-of-the-dataset

Understand the question

The premise is fairly simple - we need to return the first half of all rows in the dataset.

Analyze data

We are given one table named worker.

Let’s look at the types of values in every column:

To answer this data engineer interview question, we don’t need to work with any of these columns. We just have to return the first half of all rows.

While looking at data, you might notice that every record seems to have an incremental worker_id value.

This is a limited example with only 8 rows. In reality, data engineers work with much larger datasets.

Plan your approach

To get the first 50% of all rows, first, we must find the total number of rows in the table.

Once we do, we can divide the total by 2. This will give us half of all rows, let’s call this number X. We can tell SQL to return the first X number of rows, but not more.

Rows already have worker_id values, which appear unique and incremental. We can set a condition to return records where the worker_id value is less than X (a number that represents half of all records). However, there are no guarantees that these values are unique and/or incremental.

Instead, we can use window functions to assign each row a unique incremental number and tell SQL to return records with numerical values of less than X (half of all records).

Write the code

If you like a challenge, try to write a query yourself.

Output

The query should output four records because the example data showed 8 records.

All required columns and the first 5 rows of the solution are shown

| worker_id | first_name | last_name | salary | joining_date | department |

|---|---|---|---|---|---|

| 1 | Monika | Arora | 100000 | 2014-02-20 | HR |

| 2 | Niharika | Verma | 80000 | 2014-06-11 | Admin |

| 3 | Vishal | Singhal | 300000 | 2014-02-20 | HR |

| 4 | Amitah | Singh | 500000 | 2014-02-20 | Admin |

| 5 | Vivek | Bhati | 500000 | 2014-06-11 | Admin |

| 6 | Vipul | Diwan | 200000 | 2014-06-11 | Account |

Question 8: Formatting Names

This question involves cleaning up data and ensuring its consistency.

First Name with Left White Space Removed

Print the first name after removing white spaces from the left side.

Link to the question: https://platform.stratascratch.com/coding/9831-formatting-names

Understand the question

The task is fairly simple - remove unnecessary white spaces from the left side of first name values.

The question asks us to print (return) trimmed values.

Analyze data

Let’s start by looking at the table and dataset available for this question:

To solve this data engineer SQL interview question, we are only concerned with values in the first_name column.

Plan your approach

We need to take first name values and trim them.

The question tells us to remove white spaces only from the left side of first name values. So the standard TRIM() function is not going to work.

Write the code

Solving this challenge would be a great practice before going into a data engineering interview.

Try to write the query yourself:

Output

The query should return only trimmed first name values and nothing else.

All required columns and the first 5 rows of the solution are shown

| first_name_trim |

|---|

| Monika |

| Niharika |

| Vishal |

| Amitah |

| Vivek |

| Vipul |

| Satish |

| Geetika |

| Agepi |

Question 9: Rows With Missing Values

In this data engineer SQL interview question, we have to find and return records with multiple empty columns.

Rows With Missing Values

Last Updated: April 2022

The data engineering team at YouTube want to clean the dataset user_flags. In particular, they want to examine rows that have missing values in more than one column. List these rows.

Link to the question: https://platform.stratascratch.com/coding/2106-rows-with-missing-values

Understand the question

The objective is clear - identify rows with more than one missing value and return them.

Analyze data

Let’s look at columns in the user_flags table:

As you can see, all columns contain text values.

We’ll have to check all columns to find out whether they are empty or have values in them.

The last record has two empty columns. It is a prime example of the kind of records we’re looking for.

Plan your approach

The question asks us to return rows with missing columns, so we need to:

- Use the WHERE clause to remove rows that don’t have two or more missing values.

- Set up a complex condition (using CASE statement) to identify and keep count of empty columns for each row.

Write the code

Try to write the query yourself.

Output

The final query should return all the records with more than one missing value.

All required columns and the first 5 rows of the solution are shown

| user_firstname | user_lastname | video_id | flag_id |

|---|---|---|---|

| Courtney | dQw4w9WgXcQ | ||

| Gina | Korman | ||

| Greg | 5qap5aO4i9A | ||

| Ct6BUPvE2sM |

Question 10: Find the combinations

As a data engineer, sometimes you’ll have to prepare data for analysis. For example, extract data that meets certain criteria.

Find The Combinations

Find all combinations of 3 numbers that sum up to 8. Output 3 numbers in the combination but each combination must use three different rows (do not reuse the same record).

Link to the question: https://platform.stratascratch.com/coding/10010-find-the-combinations

Understand the question

You might have to read this data engineer SQL interview question multiple times to understand it fully.

We are given a list of numbers and need to find and output various combinations of three numbers that add up to 8.

There is an extra condition - we can not add a number to itself. Every number in the equation must be unique.

Analyze data

The transportation_numbers table is essentially a list of numbers. It has two columns, index and number, and both of them contain integer values.

We need to go through the list and pick three that add up to 8.

It looks like all values in the number column are below 8.

Plan your approach

The initial table has one column with numbers.

We need to combine the table with itself three times, so that every record contains three number values.

If you use JOIN to combine tables, you can set the ON condition to ensure that all three numbers are different from each other.

Finally, you need to set up a filter that checks if three number columns add up to 8.

Write the code

Try to solve this interesting challenge yourself.

Output

All required columns and the first 5 rows of the solution are shown

| num_1 | num_2 | num_3 |

|---|---|---|

| 5 | 0 | 3 |

| 3 | 4 | 1 |

| 3 | 1 | 4 |

| 3 | 0 | 5 |

| 7 | 0 | 1 |

| 0 | 3 | 5 |

| 1 | 2 | 5 |

| 1 | 7 | 0 |

| 1 | 0 | 7 |

| 4 | 1 | 3 |

| 0 | 7 | 1 |

| 4 | 3 | 1 |

| 1 | 5 | 2 |

| 0 | 1 | 7 |

| 5 | 3 | 0 |

| 7 | 1 | 0 |

| 2 | 5 | 1 |

| 1 | 3 | 4 |

| 0 | 5 | 3 |

| 5 | 1 | 2 |

| 3 | 5 | 0 |

| 1 | 4 | 3 |

| 5 | 2 | 1 |

| 2 | 1 | 5 |

Summary

Hopefully, by now, you better understand the day-to-day responsibilities of a data engineer and what a data engineer does and the importance of their work.

To do the job successfully, data engineers need to have a very specific set of skills, one of them is SQL. In this article, we listed the most important SQL concepts and walked you through data engineer SQL interview questions asked by reputable employers.

Practice on these questions to maximize your chances of landing a data engineer job. Go to the Data Engineer Interview Questions post on the StrataScratch blog to find more questions to solve. The platform has hundreds of SQL interview questions of various levels of difficulty. You can write queries, check their correctness, get hints and guidance all within one platform. Soon enough, you’ll master SQL and be prepared to solve any interview questions with confidence.

Share